Data Science in Astrophysics

The summer after my junior year, I was advised to do something that would look good on a college application. After researching programs in my area, I found the Science Internship Program (SIP) through UCSC, which described itself as an eight-week opportunity for select participants to gain real research experience under the mentorship of UCSC faculty. I applied and was accepted into an astrophysics project mentored by Dr. Olivier Hervet. My task was to create a tool that could automatically obtain, process, display, and store astrophysical data.

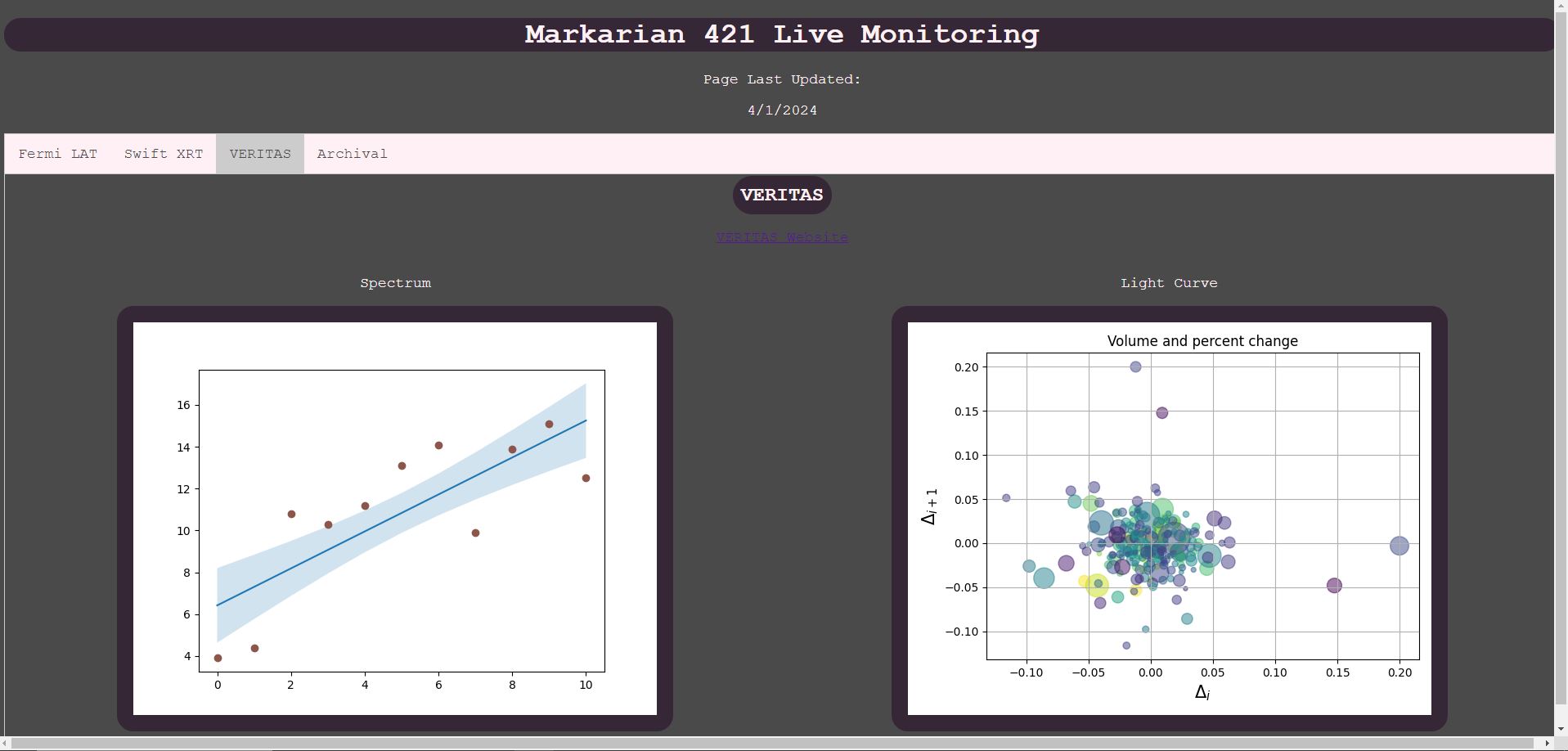

Going into this project, I had minimal programming experience, no knowledge of data science or statistics, and no knowledge of the scientific context for my data. Over the course of the eight-week program, I taught myself how to program, wrote a series of Python scripts that could be executed in order to accomplish the task, and learned enough of the science behind the active galactic nucleus Markarian 421, Cherenkov radiation, and the VERITAS telescope array in Arizona to present my tool to the UCSC Department of Astrophysics.

However, my code was not robust enough to handle every situation that could arise during the process. It was full of architectural and design mistakes, as well as many bugs. While I had technically written code that could accomplish the given task, the tool couldn’t be wrapped in a shell script, run daily, and trusted to work. One major problem was that, since I had a separate Python script for each step of the process, I managed state by reading, writing, and editing .csv files. Although the program had ended, I volunteered my time through the rest of my senior year to improve my application until it was actually useful.

In the second version of the application, I drew on all of my prior experience, as well as the knowledge I was gaining in the Introductory and AP Computer Science classes I was taking. I rebuilt the application from the ground up, using a much more modular and flexible design. By consolidating everything into a single program, I was able to manage state in RAM instead of on the hard drive, which made everything significantly easier and faster. Furthermore, I rewrote my algorithms to adhere to fundamental programming principles and went deeper into the pandas library to write code that was in line with how it was meant to be used, instead of scrappy, hacked-together solutions. After another ten months of work, I produced a tool that was robust, flexible, easy to debug, and could be run with a single shell command.
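To make the difference between the two versions concrete, here is a minimal sketch of the single-program approach; it is an illustration, not the original code. The step names `fetch`, `process`, and `to_csv`, the `mjd`/`flux` columns, and the sample values are all hypothetical, and plain Python lists of dictionaries stand in for pandas DataFrames so the example has no dependencies. Each step hands its output directly to the next one in memory, and the data is serialized to CSV only once at the end, rather than every script reading and rewriting a shared .csv file.

```python
import csv
import io
from typing import Dict, List

# Hypothetical record type: one observation per row.
Row = Dict[str, float]


def fetch() -> List[Row]:
    # Hypothetical stand-in for downloading observation records.
    return [
        {"mjd": 59000.0, "flux": 1.2},
        {"mjd": 59001.0, "flux": -0.3},
        {"mjd": 59002.0, "flux": 2.5},
    ]


def process(rows: List[Row]) -> List[Row]:
    # Hypothetical cleaning step: drop rows with negative flux.
    return [r for r in rows if r["flux"] >= 0]


def to_csv(rows: List[Row]) -> str:
    # Serialize once, at the end of the pipeline.
    buf = io.StringIO()
    writer = csv.DictWriter(buf, fieldnames=["mjd", "flux"])
    writer.writeheader()
    writer.writerows(rows)
    return buf.getvalue()


def run_pipeline() -> str:
    rows = fetch()        # state lives in memory...
    rows = process(rows)  # ...and is passed straight to the next step
    return to_csv(rows)


if __name__ == "__main__":
    print(run_pipeline())
```

Because no intermediate files exist, there is nothing on disk that a half-finished run can leave in an inconsistent state.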

Overall, I grew technically far beyond my AP Computer Science class, learning a variety of industry-standard tools, as well as what it takes to build real software. The first great challenge I faced was that, since I started with almost zero knowledge, my early design decisions were terrible and caused a lot of pain. Later in the project, I had to work around the fact that the in-house tooling used to analyze the electrical data from the telescope was not designed to be driven by another program. Simple manual tasks like “wait for the analysis to finish and see if it worked” were complicated to automate. My program had to repeatedly search for various output files, as well as read and parse the autogenerated plaintext output. After gathering information the way a human would, it finally had to decide on the best way to proceed. I wanted my program to be robust enough that, no matter what happened with code I didn’t write running on hardware I didn’t control, my code would at least fail cleanly without crashing or corrupting existing data.
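The following is a minimal sketch of one way to structure that kind of cautious automation; it is not the actual tool. It assumes the analysis writes a plaintext log containing a line such as `STATUS: OK`. That line format, the file names, and the helpers `wait_for_file`, `parse_status`, `write_atomically`, and `handle_analysis` are all hypothetical. The program polls for the output file with a timeout, parses the text, and only overwrites saved results after a successful run, writing through a temporary file so a failure never leaves existing data half-written.

```python
import os
import tempfile
import time
from pathlib import Path
from typing import Optional


def wait_for_file(path: Path, timeout_s: float, poll_s: float = 0.5) -> bool:
    """Poll until `path` exists or the timeout expires."""
    deadline = time.monotonic() + timeout_s
    while time.monotonic() < deadline:
        if path.exists():
            return True
        time.sleep(poll_s)
    return path.exists()


def parse_status(text: str) -> Optional[str]:
    """Return the value of a hypothetical 'STATUS:' line, or None if absent."""
    for line in text.splitlines():
        if line.startswith("STATUS:"):
            return line.split(":", 1)[1].strip()
    return None


def write_atomically(path: Path, contents: str) -> None:
    """Write to a temp file in the same directory, then swap it into place."""
    fd, tmp = tempfile.mkstemp(dir=path.parent, suffix=".tmp")
    try:
        with os.fdopen(fd, "w") as f:
            f.write(contents)
        # Atomic rename on POSIX when both paths are on the same filesystem.
        os.replace(tmp, path)
    except BaseException:
        os.unlink(tmp)  # clean up the partial temp file; old results untouched
        raise


def handle_analysis(output: Path, results: Path, timeout_s: float = 60.0) -> bool:
    """Save the analysis output only if it finished with a hypothetical OK status."""
    if not wait_for_file(output, timeout_s):
        return False  # output never appeared: fail cleanly
    text = output.read_text()
    if parse_status(text) != "OK":
        return False  # failed or unparseable run: leave old results alone
    write_atomically(results, text)
    return True
```

Returning `False` instead of raising lets a daily shell-script run log the failure and exit normally, while the previously saved results stay intact.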